After more than 40 years of operation, DTVE is closing its doors and our website will no longer be updated daily. Thank you for all of your support.

Sports streaming goal: total engagement

While broadcast-like low latency and QoE are table stakes, streamers and rights holders are moving into interactive and personalised live applications to bring the future of sport to fans.

You could argue that sports organisations are keeping one foot in the more lucrative, mass-audience, advertiser-friendly broadcast camp, but the transition to streaming is happening at pace as they seek further monetisation of rights and chase a shifting consumer base. Streaming services and tech giants, meanwhile, are looking to live sports as a differentiator to fight competition and reduce churn, or as a loss leader for device sales or ecommerce.

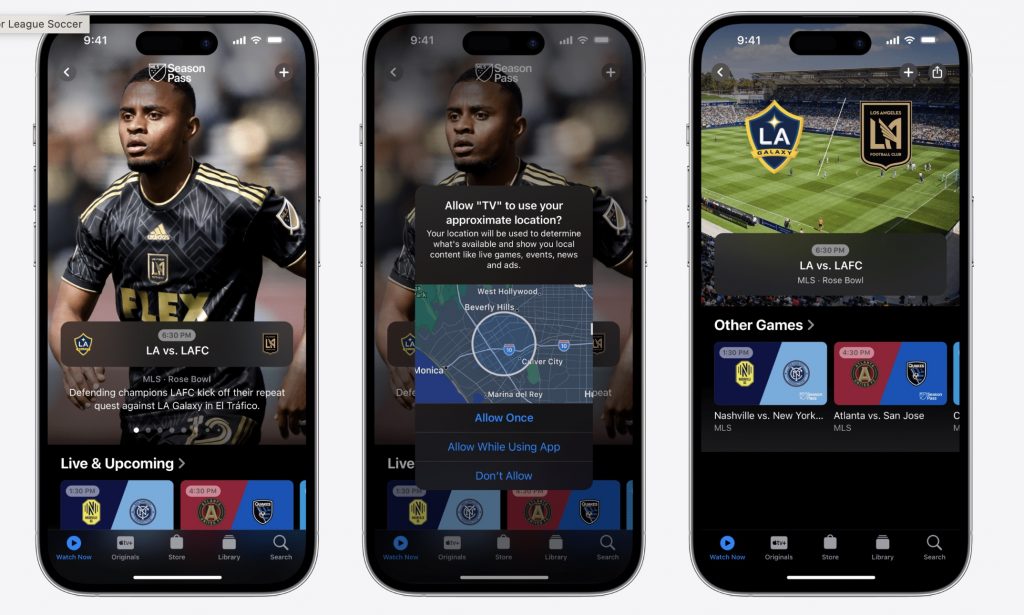

Examples of this emerging relationship include Amazon’s exclusive Thursday Night Football (TNF) deal, worth $1bn a year through to 2033 (though the bulk of NFL games will stay on the major networks for another decade), and Apple’s 10-year, $2.5bn Major League Soccer package, which begins in 2023 and will make every one of the league’s games available on a new MLS service. The MLS deal is significant because it is a total shutout of traditional channels.

But if streaming really wants to unseat linear TV as the favoured way of watching sport, then its technical infrastructure needs to be watertight – and that’s still not always the case.

CBS All Access crashed at the start of the 2021 Super Bowl. Even Amazon could not prevent some viewers being frustrated by poor video quality and buffering during its TNF debut, when the Los Angeles Chargers played the Kansas City Chiefs.

To compound the NFL’s problems, users trying to add NFL RedZone via YouTube TV’s Sports Plus package received error messages, while the league’s own new direct-to-consumer (DTC) service, NFL+, had usability issues including fast-forward malfunctions.

“When millions of viewers are watching a live sports event at the same time, scaling the delivery is the major challenge,” says Xavier Leclercq, VP business development, Broadpeak. “While it’s easy to serve a small audience from a cloud provider or a global CDN, it can be complex to scale to millions of concurrents who all want the content in high quality across a variety of networks and devices.”

There is broad agreement on the suite of infrastructure required to ensure high-quality coverage. Ateme’s breakdown is typical:

- Broadband networks (fibre, 5G) with edge-computing capabilities and enough bandwidth for high-quality, high-resolution video and audio.

- Highly distributed, elastic CDN that caches content close to viewers and adapts to traffic peaks.

- Dedicated video-delivery chain combining encoding, packaging, CDN and player optimisations.

- Efficient video compression platforms for outstanding picture quality at the lowest possible bitrate.

“Providing high-quality coverage requires delivering exceptional video quality to the large screen and to every user, at scale, with low latency and without stream interruption,” says Alain Pellen, senior market manager, OTT and IPTV, at Harmonic. “To achieve that for a premium event, all elements of the chain are important, including the live support provided by vendors. On-premises contribution encoding, networking, cloud transcoding, origin, CDN and the player/app solutions determine the video quality (which should be at least 1080p60 for premium sports), the end-to-end latency (which is expected to be similar to broadcast) and enable scaling. On top of that, source redundancy, seamless cloud geo-redundancy and multi-CDN are required to guarantee 99.995% uptime or better.”
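The Python sketch below shows how much outage the 99.995% target allows. The function name and the three-hour event window are illustrative assumptions. They are not figures from Harmonic.

```python
# Outage budget implied by an availability target.
# The 99.995% target is quoted above; the three-hour window is a hypothetical event length.

SECONDS_PER_YEAR = 365.25 * 24 * 3600  # average calendar year, including leap years


def downtime_budget_s(availability: float, window_s: float) -> float:
    """Seconds of outage permitted within window_s at the given availability (0 to 1)."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be a fraction between 0 and 1")
    return (1.0 - availability) * window_s


if __name__ == "__main__":
    target = 0.99995
    print(f"{downtime_budget_s(target, SECONDS_PER_YEAR) / 60:.1f} min per year")  # 26.3 min per year
    print(f"{downtime_budget_s(target, 3 * 3600):.2f} s per three-hour event")      # 0.54 s per three-hour event
```

At that level a service may be down for only about 26 minutes across a whole year, or roughly half a second during a three-hour match window. This is why redundancy at every stage is treated as essential rather than optional.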

Addressing latency

That’s a lot of moving parts, but addressing scale and latency is a priority for live streamers. Audiences are increasingly unforgiving, and top-tier sports rights owners no longer tolerate streaming services that underperform broadcast services.

“Taking latency out of the final delivery to viewers’ devices enables folks across platforms to experience the live action all at the same time — which in a world of second screen, third screen and social viewing experiences, is more critical than ever,” says Rick Young, SVP, head of products, LTN Global.

“Consumers are paying considerable subscription costs for services specifically for live content, so the expectation is that the service level matches what they would find on long-established platforms. And, if something does go wrong, word spreads quickly because of the prevalence of social platforms.”

Glass-to-glass delay is benchmarked differently depending on the application. Broadcast-equivalent latency is generally accepted to be around six seconds. Another benchmark keeps live video within the time it takes someone to post ‘Goal!’ on social media. The ultimate target is the near-realtime data feed required for betting.

“Streaming video will always carry an element of delay,” says Chris Wilson, director of market development, Sports, MediaKind, “but the norm is around the six second mark.”
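These benchmarks can be modelled as a sum of per-stage delays. In that model the player buffer is often the largest term. The Python sketch below assumes a simple additive model. The stage names and timings are illustrative and are not measurements from MediaKind or any other vendor quoted here.

```python
from dataclasses import dataclass


@dataclass
class LatencyBudget:
    """Hypothetical per-stage delays, in seconds, for one live stream."""
    encode: float         # capture and encoding
    package: float        # packaging and origin
    delivery: float       # CDN and last-mile transit
    buffered_units: int   # segments or chunks the player holds before it starts playing
    unit_duration: float  # length of each segment or chunk

    def glass_to_glass(self) -> float:
        # Additive model: the player buffer is (units held) x (unit length).
        player_buffer = self.buffered_units * self.unit_duration
        return self.encode + self.package + self.delivery + player_buffer


# Illustrative only: a player holding three 6 s segments versus four 0.5 s chunks.
classic = LatencyBudget(1.0, 0.5, 0.5, buffered_units=3, unit_duration=6.0)
low_latency = LatencyBudget(1.0, 0.5, 0.5, buffered_units=4, unit_duration=0.5)
print(classic.glass_to_glass(), low_latency.glass_to_glass())  # 20.0 4.0
```

In this model, the total falls from tens of seconds towards broadcast-like figures mainly by shrinking the unit the player must buffer, not by speeding up any single stage. Chunked low-latency HLS, DASH and CMAF pull exactly that lever.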

The industry has addressed the issue with low-latency versions of HLS and DASH, and with CMAF chunks delivered over HTTP/1.1 chunked transfer encoding, which eliminates unnecessary delays between, for example, a packager and a CDN.

“While a necessary step, these protocols alone are simply not enough to bring down latency during popular events when networks may be congested due to high audiences,” says Leclercq.
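Chunked transfer encoding lets a packager start sending a segment before it has finished producing it. The Python sketch below shows only the framing defined in the HTTP/1.1 specification (RFC 9112). In practice the web server or packager handles this. The placeholder byte strings stand in for real CMAF chunks.

```python
def encode_chunked(parts: list[bytes]) -> bytes:
    """Frame byte strings as an HTTP/1.1 chunked transfer-encoded message body.

    Each chunk is: size in hex, CRLF, the data, CRLF. A zero-size chunk ends the body.
    """
    out = bytearray()
    for part in parts:
        if not part:
            continue  # an empty chunk would be read as the terminator, so skip it
        out += f"{len(part):x}\r\n".encode("ascii") + part + b"\r\n"
    out += b"0\r\n\r\n"  # last-chunk followed by an empty trailer section
    return bytes(out)


# Hypothetical example: a packager forwards each CMAF chunk (moof+mdat pair)
# as soon as it is produced, instead of waiting for the whole segment.
cmaf_chunks = [b"<moof+mdat #1>", b"<moof+mdat #2>"]
body = encode_chunked(cmaf_chunks)
```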

Broadpeak’s solution lies in making video delivery more deterministic: the more likely the content is to arrive on time, the smaller the buffer needs to be in the playback devices.

This is achieved by serving content from within the ISP networks, explains Leclercq: “One option is to multiply the number of CDN locations inside the ISPs, so content only travels a tiny distance to get to the viewers. Another is to leverage multicast for reliable delivery. Technologies like multicast ABR are being adopted by large ISPs to scale their live streaming offerings.”
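Broadpeak’s argument about deterministic delivery can be shown with numbers. If a player must absorb nearly all late chunks without stalling, its buffer has to cover a high percentile of delivery delay. The Python sketch below uses a nearest-rank percentile and made-up delay samples. It illustrates the principle only and is not Broadpeak’s method.

```python
import math


def buffer_for_on_time_delivery(delays_ms: list[float], on_time_ratio: float = 0.99) -> float:
    """Smallest buffer (ms) that absorbs the given share of observed chunk delivery delays.

    Uses the nearest-rank percentile over the sample.
    """
    if not delays_ms:
        raise ValueError("need at least one delay sample")
    ranked = sorted(delays_ms)
    rank = math.ceil(on_time_ratio * len(ranked))  # nearest-rank method, 1-based
    return ranked[rank - 1]


# Hypothetical samples: similar typical delay, but one path is far less predictable.
deterministic = [200, 210, 205, 215, 220, 200, 210, 205, 215, 230]
congested = [200, 210, 205, 900, 220, 200, 1500, 205, 215, 2400]
print(buffer_for_on_time_delivery(deterministic, 0.9))  # 220
print(buffer_for_on_time_delivery(congested, 0.9))      # 1500
```

The typical delays are similar, yet the unpredictable path needs a buffer roughly seven times larger to deliver the same share of chunks on time.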

Everyone is working to deliver reliable experiences at scale, and that is tricky given that providers have no control over network conditions.

“CMAF also increases network load by multiplying the number of HTTP requests. For the same length of content, there will be many more ‘chunks’ to request,” says Arnodin, who points to Byterange, a technique designed to limit the number of requests sent to the CDN.

This limits the number of HTTP requests, frees up some bandwidth, and makes interaction between the player and the CDN considerably more efficient. Moreover, as delivery would work the same way for both HLS and DASH, it’s possible to perform media chunk sharing between the two protocols and effectively halve the network bandwidth.
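The request overhead Arnodin describes can be quantified. The Python sketch below assumes, hypothetically, 4-second segments split into 0.5-second CMAF chunks. It compares two approaches:

- addressing each chunk as a separate object;
- fetching one segment per request and reading its chunks as byte ranges.

It also counts the copies of the same media a CDN holds with and without HLS/DASH sharing. The durations are illustrative, not Ateme’s figures. Real request counts also depend on playlist and manifest refreshes.

```python
def requests_per_minute(unit_duration_s: float) -> float:
    """Media requests per viewer, per rendition, per minute: one per addressable unit."""
    if unit_duration_s <= 0:
        raise ValueError("unit duration must be positive")
    return 60.0 / unit_duration_s


def cached_copies(formats: int, shared_cmaf_media: bool) -> int:
    """Copies of the same media the CDN must fetch and cache for the given number of formats."""
    return 1 if shared_cmaf_media else formats


# Hypothetical: 4 s segments, each made of eight 0.5 s CMAF chunks.
chunk_addressed = requests_per_minute(0.5)  # each chunk fetched as its own object
byte_range = requests_per_minute(4.0)       # one request per segment, chunks read as byte ranges
print(chunk_addressed, byte_range)          # 120.0 15.0
print(cached_copies(2, shared_cmaf_media=False), cached_copies(2, shared_cmaf_media=True))  # 2 1
```

Under these assumptions, byte-range addressing cuts media requests eightfold. Serving HLS and DASH from one set of CMAF media also halves what the CDN must fetch from origin and cache.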

Latency cannot, however, be tackled in delivery alone, and in some cases it is necessary to buffer a feed. An operator with both broadcast and OTT offerings, for example, will want both services to be synchronised.

“In a centralised (remote production) model, which is very much the trend going forward, ensuring you have reliable backhaul transport from the venue to the facility hub or cloud is crucial,” says Young. “Those feeds need to be synced and low latency. Once the show is ready for distribution, that same reliability and low latency is critical to delivering the feed either to distribution partners like MVPDs, broadcast facilities, or CDNs.”

Careful consideration needs to be given to synchronising alternate camera feeds, which come straight from the event and are viewed alongside the main programme, with production feeds that are subject to additional delay. This also highlights the need for a predictable, repeatable delay against which all feeds can be timed.
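One way to achieve a predictable, repeatable delay is to choose a single target latency and hold every faster feed back to it. The Python sketch below assumes each feed’s intrinsic latency has already been measured, for example against embedded timecode. The feed names and values are hypothetical.

```python
def alignment_delays(feed_latency_s: dict[str, float], target_s: float) -> dict[str, float]:
    """Extra delay to add to each feed so that all of them play out at the same target latency."""
    slowest = max(feed_latency_s.values())
    if target_s < slowest:
        raise ValueError(f"target {target_s}s is below the slowest feed ({slowest}s)")
    # Round to milliseconds to keep floating-point noise out of the schedule.
    return {name: round(target_s - latency, 3) for name, latency in feed_latency_s.items()}


# Hypothetical intrinsic latencies: alternate cameras straight from the venue
# arrive well ahead of the produced programme feed.
feeds = {"programme": 5.2, "alt_camera": 1.4, "player_cam": 1.9}
print(alignment_delays(feeds, target_s=6.0))
# {'programme': 0.8, 'alt_camera': 4.6, 'player_cam': 4.1}
```

The slowest feed sets the floor: the common target cannot be lower than the produced programme’s own delay.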

“QoE is about understanding proper sync at source through to playback to ensure that viewers of the same service on different devices can swap between views and be at the same point in the video,” says Wilson. “You want to reduce all latency in the chain from contribution to delivery. So, when we do event based live streams we tend to focus on optimising the latency in the components we can control – notably the software for encoder and packager.”

MediaKind calls this ‘direct path technology’ and claims it radically changes the way data is passed from the encoder to the packager, playing a major role in cutting the time needed to move content from one video processing function to the next.

“There’s no point only having a low latency CDN or encoder if the other elements in the ecosystem are not low latency,” concurs Synamedia VP product management, Elke Hungenaert. “That’s why at Synamedia all components in the pipeline, from capture to playback, are low latency for optimal results.”

Synamedia recently launched ‘Application Tailored Latency’ for its Fluid EdgeCDN. Hungenaert says: “ISPs and infrastructure providers can, for the first time, switch between latencies within a streaming service. This makes it possible to launch low-latency interactive services, such as multi-view, betting and watch parties, while keeping infrastructure costs under control.”

Opening up the sports book

For betting or gamification applications, the stream needs to be virtually realtime and synchronised with the actual event so that viewers in the stadium or at home can bet. Regulations surrounding the sport often require realtime delivery.

Some challenges are not necessarily technical. “For instance, if you’re a non-specialist sports streamer then there may be issues over audience acceptance of betting,” says Sanjay Duda, COO, Planetcast International. “It’s much easier for a specialist sports streamer to launch betting. Further, betting is treated with mixed feelings in a number of countries. In India, for example, there are severe limitations to public betting. However, rights holders are finding adjacent engagements such as fantasy sporting and skill-based gaming where points are linked to performances or game outcomes.”

Betting (sportsbook) is a particularly hot topic in the US following the relaxation of restrictions on sports gambling. “The only conversation [there] is how to maximise the sportsbook and betting rights,” says Wilson. “Betting is the uber use case. It unlocks huge amounts of revenue.”

According to a recent report by Statista, the global sports betting market is expected to reach $114.4bn by 2028, just over 86% growth compared with 2021, driven by the increasing popularity of online and mobile betting.
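The Statista projection implies a 2021 baseline and an annual growth rate that are not stated here. Treating “just over 86%” as exactly 86% across the seven years from 2021 to 2028 gives approximately:

$$
V_{2021} \approx \frac{V_{2028}}{1.86} = \frac{\$114.4\,\text{bn}}{1.86} \approx \$61.5\,\text{bn},
\qquad
\text{CAGR} \approx 1.86^{1/7} - 1 \approx 9.3\%.
$$

Because growth is “just over” 86%, the true baseline would be slightly lower and the annual rate slightly higher.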

However, attaining ultra-low latency is not cheap, and the cost of implementation may outweigh any direct benefit particularly for large-scale events such as the Super Bowl.

“If a huge event did stream in ultra-low latency to everyone the internet wouldn’t cope,” says Wilson. “Even if there were enough silicon in the world to do it, the cost would be prohibitive.”

He suggests, though, that rights holders could enable ULL for a limited number of paying customers, those who actually want to bet, while keeping the main feed at the broadcast-standard six seconds.

“This would control cost and unlock a different tier of engagement but to get there you’d have to pay and this would be part of the commercial strategy of the platform.”
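Wilson’s tiered model can be expressed as a simple admission rule. The Python sketch below is hypothetical: the class, tier labels and fixed capacity are assumptions. They show the costly ULL tier being capped and reserved for entitled bettors, while everyone else stays on the standard feed.

```python
from dataclasses import dataclass, field


@dataclass
class TierAllocator:
    """Hypothetical allocator: a capped pool of ULL sessions for viewers entitled to bet."""
    ull_capacity: int
    ull_sessions: set[str] = field(default_factory=set)

    def assign(self, session_id: str, entitled_to_bet: bool) -> str:
        already_ull = session_id in self.ull_sessions
        if entitled_to_bet and (already_ull or len(self.ull_sessions) < self.ull_capacity):
            self.ull_sessions.add(session_id)
            return "ultra-low-latency"
        # Everyone else stays on the broadcast-equivalent feed, about six seconds behind live.
        return "standard"

    def release(self, session_id: str) -> None:
        """Free a ULL slot when a session ends."""
        self.ull_sessions.discard(session_id)


allocator = TierAllocator(ull_capacity=2)
print([allocator.assign(s, bet) for s, bet in [("a", True), ("b", False), ("c", True), ("d", True)]])
# ['ultra-low-latency', 'standard', 'ultra-low-latency', 'standard']
```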

ULL also unlocks gamification of live content. This includes micro-wagering, where friends bet against each other on, for example, the outcome of a line-out, with no money at stake. Another example, currently run on social media before the event, is the extra power boost on track that fans can vote to give their favourite drivers in Formula E. “The closer in time you can link the interactivity between those consuming the content and the athletes, the more powerful the application becomes,” notes Wilson.

Enhanced video streams could also be enabled for spectators at an event, who could view different camera angles with data overlays, for example. “ULL allows you to really start to play with the live content,” says Wilson. “The more interactivity you have as rights holder the more you learn about a consumer – which is the goal of DTC.”

For providers targeting sub-second applications there are alternative ULL protocols, but they cannot be scaled via conventional CDNs, which makes them an expensive option requiring custom processing in the service provider’s network. WebRTC, for example, is not HTTP-based and is generally considered better suited to video conferencing.

That hasn’t stopped Phenix from marrying its real-time transcoding and distribution network with Videon’s edge computing platform to deliver latency of under half a second and synchronised viewing within three frames of video. Feeds are provided via SDI or HDMI to Videon’s edge platform, where the sources are converted and packaged into SRT or RTMP streams. These are contributed to the Phenix cloud and transcoded into multi-bitrate WebRTC output for distribution. Phenix claims this enables a host of interactive use cases at scale, including 500,000+ concurrent viewers for the 2021 Cheltenham Festival.

Phenix also points to its SyncWatch technology which ensures synchronous media “to all members of an audience, on all devices, regardless of device type, location, or connection, within 100 milliseconds — three frames of video at 30fps.”

Interactive applications include enabling viewers to watch up to seven angles of a game, multi-angle replay and micro-wagering on every play of a game.
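Phenix’s three-frame figure follows directly from the frame rate: at 30fps, three frames last 3 × 1000 / 30 = 100 ms. The Python sketch below turns that tolerance into a check across devices’ playback positions. How those positions are measured and reported is an assumption, not something Phenix describes here.

```python
def sync_window_ms(frames: int, fps: float) -> float:
    """Duration of the given number of frames, in milliseconds."""
    return frames * 1000.0 / fps


def in_sync(positions_ms: list[float], frames: int = 3, fps: float = 30.0) -> bool:
    """True if all devices' playback positions fall within one window of each other."""
    return max(positions_ms) - min(positions_ms) <= sync_window_ms(frames, fps)


print(sync_window_ms(3, 30.0))            # 100.0
print(in_sync([5000.0, 5040.0, 5095.0]))  # True: 95 ms spread
print(in_sync([5000.0, 5120.0]))          # False: 120 ms spread
```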

“Unless you’re deploying something like WebRTC, it may be better to go live with a manageable degree of latency by not taking bets on events that could happen in the next few seconds,” says Duda. Examples include: Will they score this penalty? Sink this putt? Who will be the next scorer? Will the next ball be hit for a boundary?
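Duda’s rule of thumb can be written as a simple check. A market is only safe to offer if its outcome cannot be settled sooner than the viewer’s stream delay plus a safety margin. The Python sketch below is hypothetical: the function, margin and timings are assumptions. Real sportsbooks apply their own regulated suspension rules.

```python
def can_offer_market(earliest_resolution_s: float, viewer_latency_s: float,
                     safety_margin_s: float = 1.0) -> bool:
    """Hypothetical rule for in-play markets.

    earliest_resolution_s is measured from the moment the viewer sees the play on screen.
    In reality the action is already viewer_latency_s further along, so the market is
    only safe if the outcome cannot arrive within that delay plus a safety margin.
    """
    return earliest_resolution_s > viewer_latency_s + safety_margin_s


print(can_offer_market(3.0, viewer_latency_s=6.0))     # False: next ball, broadcast-like delay
print(can_offer_market(3.0, viewer_latency_s=0.5))     # True: next ball, WebRTC-class delay
print(can_offer_market(3600.0, viewer_latency_s=6.0))  # True: longer-horizon market
```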

Personalisation

In addition to interactive applications, personalisation will be important to meet the needs of different audiences, whether that’s tech-savvy youngsters or the increasing number of women watching sports. The enabling technologies include low latency streaming at scale, elasticity, synchronisation and analytics.

“It requires an end-to-end environment that is optimised for delivery,” says Hungenaert. “Synamedia believe the use of the second screen while watching live sports is set to boom, whether fans are in the stadium, pub or living room. As anyone who has been to Wimbledon and hopes to see the player stats or the score elsewhere on their app will already know, 5G is important here. It also requires open APIs for integration with third party solutions.”

New technologies are enabling “extreme personalisation” in sports streaming, says Arnodin. For example, audiences can choose the viewing angle (multi-camera streaming).

“Sports fans at home can totally immerse themselves in the stadium atmosphere from their living room,” he says. “They can also personalise the soundtrack – choosing the commentary in a different language; increase or decrease the volume of the commentary or the background stadium noise (or completely remove either).”

Amazon Prime debuted TNF with X-Ray statistics, Alexa integration and a simulcast of commentary from YouTube sports and comedy group Dude Perfect. Other areas of personalisation include re-watching the most important moments and inserting targeted advertising using dynamic ad insertion (DAI).

“This requires higher bandwidth – as personalisation implies unicast – and a distributed edge caching and computing architecture,” says Arnodin. “The higher bandwidth accommodates the vast amounts of high-quality images and audio experience required for immersive video; the edge architecture makes it possible to reduce bandwidth on the network (caching), improve interactivity (low latency), and process content in the edge to personalise it (edge processing).”

Personalised sports content is in high demand. A survey from Quantum Market Research found that 96% of British soccer fans are willing to pay more for a subscription streaming service that provides a personalised experience. Instead of building deeper relationships with fans, however, leagues and providers may actually be creating artificial barriers by failing to offer a helpful user experience.

That’s according to a July survey by Deloitte, which found US fans facing “an increasingly confusing mix of options to wade through to watch their favourite teams and events: home and mobile streaming services, regional networks, and cable/satellite channels.”

Over half of sports fans surveyed said that they paid for a streaming video service to access sports content in the last year. But fans have some frustrations with their experiences, which could potentially reduce their level of overall engagement. Negative sentiments include feeling burdened by too many subscriptions (49%), frustrated by difficulties finding content (62%), and actually missing events they wanted to see because of these difficulties (54%).

To LTN’s Young the explosion of distribution channels, digital platforms, and viewing devices means customisation is an absolute necessity. “Streamers need to engage global audiences with personalised and localised streams to foster platform loyalty while maximising monetisation,” he says. “Media companies are buying valuable sports rights, but they need ways to brand, monetise and distribute content in a scalable and tailored fashion.”

Customisation workflows such as LTN Arc are claimed to simplify live event versioning at scale for digital audiences, enabling rightsholders to repurpose and decorate feeds into tailored streams for handoff across platforms.

“From a technical perspective, automated, fully managed workflows that seamlessly prepare content for various destinations, with watermarking, slating, video insertion, and ad trigger insertion to ensure seamless cross-platform reach and optimal monetisation are game-changing,” Young asserts.

Sports streamers want to be able to entertain fans before a game, at half-time and on the way home, as well as providing instant replays on personal devices during the game. There may even be added features like individual player cams and alternative angles to the fan’s point of view.

“Many fans will prefer to choose how to be entertained or informed at a stadium by their favourite streamer on their own device rather than rely on one feed through a fixed large screen at the stadium,” Duda suggests. “The streamers that we work for are primarily aiming their services at sports fans at home, but the same services can be delivered by 5G in the stadium. The future will see AR, Virtual Seat and the Metaverse enabled at the stadium through 5G. Fans will be able to look through their AR glasses (or use their mobile) to get access to live stats on a player’s performance.”

Regionalisation is another key to connecting audiences with culturally relevant viewing experiences. But exporting premium, customised live sports content requires “a literal and technological translation”, according to Young. “Content providers should look for solutions to seamlessly activate remote announcers or voice-overs in the local language and custom graphics, active video clipping, highlights, and decorated event recording to create customised viewing experiences while fitting diverse and exacting specifications – in any country and on any platform.”

A case in point is LTN’s work with a tier-one sports league, which is creating VIP subscription fan experiences by versioning the primary broadcast production with customised content delivered to digital platforms. In addition, the league is taking international and regionalised versions produced for other parts of the world and bringing them back home to offer viewers a richer portfolio of viewing options as part of the VIP experience.

QoE

The unpredictability of sport can put a video streaming platform under huge strain and requires providers to deal with very dynamic workloads. Issues may arise from the volume of users and concurrency, as well as from the join rate, when a significant number of people join the stream simultaneously.

“The emphasis is on the distribution systems,” says Leclercq. “The origin servers, CDN, and multicast ABR solution need to be able to serve dynamic audiences with a consistent QoE. Secondly, service providers need to achieve a fine balance of reliability, latency, and quality on every single stream. This is a complex task, and there are times when visual quality may need to be capped to ensure playback consistency, or latency may need to be increased to avoid rebuffering problems.”

Here, Leclercq says, it is paramount to gather data to refine delivery configurations (i.e., bitrate, latency, CDN selection). Modern techniques such as A/B testing can establish the optimal configuration for each combination of device, network and resolution.
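A minimal version of this data-driven approach groups playback sessions by device, network and resolution, then keeps whichever configuration performed best in each group. The Python sketch below assumes rebuffering ratio is the only metric. It skips the statistical significance testing a real A/B programme would need. Field names and data are hypothetical.

```python
from collections import defaultdict
from statistics import mean
from typing import NamedTuple


class Session(NamedTuple):
    device: str
    network: str
    resolution: str
    config: str            # delivery configuration under test (one arm of the A/B test)
    rebuffer_ratio: float  # share of watch time spent stalled; lower is better


def best_config_per_segment(sessions: list[Session]) -> dict[tuple[str, str, str], str]:
    """For each device/network/resolution combination, pick the config with the lowest mean rebuffering."""
    ratios: dict[tuple[str, str, str, str], list[float]] = defaultdict(list)
    for s in sessions:
        ratios[(s.device, s.network, s.resolution, s.config)].append(s.rebuffer_ratio)

    best: dict[tuple[str, str, str], tuple[str, float]] = {}
    for (device, network, resolution, config), values in ratios.items():
        segment = (device, network, resolution)
        score = mean(values)
        if segment not in best or score < best[segment][1]:
            best[segment] = (config, score)
    return {segment: config for segment, (config, _) in best.items()}


sessions = [
    Session("tv", "fibre", "1080p", "A", 0.02),
    Session("tv", "fibre", "1080p", "A", 0.04),
    Session("tv", "fibre", "1080p", "B", 0.01),
    Session("mobile", "4g", "720p", "A", 0.05),
    Session("mobile", "4g", "720p", "B", 0.09),
]
print(best_config_per_segment(sessions))
# {('tv', 'fibre', '1080p'): 'B', ('mobile', '4g', '720p'): 'A'}
```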

Onboarding at scale

Payment systems also need to be reliable and agile. “Access tickets can often be bought at the very last minute in large numbers, causing significant congestion across payment services,” says Louise Seddon, global head of live services, Red Bee Media. “While broadcast streaming services tend to have payment packages to balance congestion more easily, on-demand models mean that many consumers may purchase streaming tickets simultaneously, causing added strain.”

Entitlement services also face similar stress levels caused by large volumes of users logging onto a service at the same time. Streaming providers need a mechanism to verify each consumer, meaning entitlement gateways need to be highly efficient.

“Overcoming connectivity loads for large-scale live streaming events can be compared with entering a stadium at any major in-person event,” she says. “Multiple entry points and staggered access is paramount. Advanced streaming platforms utilise creative content strategies to balance entry loads in a more manageable way, such as providing consumers with exclusive, backstage content experiences to draw viewers in ahead of event start times.”
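Staggered access is often enforced by rate limiting in front of payment and entitlement services. The Python sketch below uses a token bucket, a standard rate-limiting technique chosen here for illustration. It is not Red Bee Media’s implementation, and the rates are hypothetical. Turned-away clients could be asked to retry after a randomised back-off, or shown pre-event content in the meantime.

```python
import time
from typing import Callable


class TokenBucket:
    """Admit on average `rate` logins per second, allowing bursts of up to `capacity`."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start full so an initial burst is absorbed
        self.clock = clock      # injectable for testing
        self.last = clock()

    def try_admit(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


gate = TokenBucket(rate=500.0, capacity=2_000.0)  # hypothetical limits
admitted = gate.try_admit()
```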

Connectivity is another vital element, with online services facing huge spikes at the start of any live event. Seddon says streaming infrastructure needs to be integrated with a sufficient number of CDN nodes to balance connectivity loads and handle inevitable spikes.

Ateme’s solution for this is ‘audience-aware encoding.’ The CDN links to the encoder to communicate how content is actually being watched. With this data, the encoder optimises the ABR ladder, adapting it to fit viewers’ needs while eliminating unnecessary profiles. This reduces network traffic without reducing video quality – simply by cutting waste.
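Ateme does not detail its algorithm here, but the idea can be sketched in Python. The example below is hypothetical. It assumes the CDN reports how many sessions are using each rung of the ABR ladder. Rungs whose share falls below a threshold are dropped, while the lowest and highest rungs are always kept.

```python
def prune_ladder(viewers_by_rung: dict[int, int], min_share: float = 0.02) -> list[int]:
    """Keep ABR rungs (bitrates in kbps) that enough viewers actually use.

    The lowest and highest rungs are always kept so every device still has a floor and a ceiling.
    """
    total = sum(viewers_by_rung.values())
    rungs = sorted(viewers_by_rung)
    if total == 0 or len(rungs) <= 2:
        return rungs
    keep = {rungs[0], rungs[-1]}
    keep.update(r for r in rungs if viewers_by_rung[r] / total >= min_share)
    return sorted(keep)


# Hypothetical CDN report: sessions per rung during a live match.
usage = {400: 500, 800: 50, 1600: 3000, 3000: 20, 4500: 9000, 6000: 1430}
print(prune_ladder(usage))  # [400, 1600, 4500, 6000]
```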

Upping the format

Several premium sporting events are already being streamed in UHD but the challenges remain the same – “balancing the trade-off between network congestion and video quality,” says Arnodin.

OTT services have traded off some aspects of QoE to deliver UHD experiences. For example, BBC iPlayer, the only platform in the UK to stream Euro 2020 football matches in UHD, delivered the Euro 2020 final to 6.29 million concurrent sessions. However, many users complained about additional latency of up to a minute on the UHD stream compared with the HD broadcast.

LONDON, ENGLAND – JULY 07: Harry Kane of England celebrates after scoring their side’s second goal during the UEFA Euro 2020 Championship Semi-final match between England and Denmark at Wembley Stadium on July 07, 2021 in London, England. (Photo by Eddie Keogh – The FA/The FA via Getty Images)

“To scale UHD delivery, a trend of increased collaboration between OTT service providers and ISPs connecting end users has emerged,” says Leclercq. “An excellent example is TIM (Telecom Italia) delivering UHD sports streams. TIM combines multicast capabilities in the network with ABR to scale its video streaming to more than 3 million subscribers. Moreover, TIM has opened its multicast ABR distribution system to OTT providers like DAZN and Mediaset, enabling them to benefit from the reliable distribution on TIM’s network when delivering sports content to subscribers.”
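Multicast ABR scales because of simple arithmetic on a shared link. With unicast, every viewer adds a copy of the stream. With multicast, each rendition in use crosses the link once. The Python sketch below compares the two for a hypothetical access-network segment. The audience split and bitrates are invented. The sketch ignores the unicast fallback and repair traffic a real multicast ABR system manages.

```python
def unicast_load_mbps(viewers_by_bitrate: dict[float, int]) -> float:
    """Each viewer receives a separate copy of their rendition across the shared link."""
    return sum(bitrate * viewers for bitrate, viewers in viewers_by_bitrate.items())


def multicast_load_mbps(viewers_by_bitrate: dict[float, int]) -> float:
    """Each rendition with at least one viewer is carried once, whatever the audience size."""
    return sum(bitrate for bitrate, viewers in viewers_by_bitrate.items() if viewers > 0)


# Hypothetical: 10,000 viewers of one match, keyed by rendition bitrate in Mbps.
audience = {8.0: 6_000, 4.5: 3_000, 2.0: 1_000}
print(unicast_load_mbps(audience))    # 63500.0
print(multicast_load_mbps(audience))  # 14.5
```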

Besides these optimisations, new standardised codecs such as VVC also play a role, reducing the bandwidth required for a given resolution and quality by around 40% compared with HEVC.

8K is being tentatively explored as a service differentiator or additional revenue stream. BT Sport has trialled 8K broadcast, and the Beijing Winter Games featured 8K VR. For better visual quality, VR headsets need higher-resolution screens, and the feed would ideally be derived from a 16K source (as the producers of the Beijing Olympics VR experience claimed).

UHD also includes parameters such as HDR, higher frame rate and immersive audio, which have a strong impact on user experience. Adopting any of these becomes a largely economic decision about budgeting for increased bandwidth. “Smaller players may take more pragmatic approaches to enhance their coverage compared with major broadcasters carrying premium sports events such as the Olympics or UEFA Champions League football,” says Seddon. “While UHD coverage can be easily supported with existing OTT solutions, more affordable alternatives to improving viewer experience include enabling increased frame rates.”

“Arguably higher frame rate is more important for sports than 8K,” agrees Wilson. “But to make 120fps viable you’d essentially have to rip out the entire production infrastructure and replace it because nothing will support that today. Maybe 8K HFR supported by AI/ML will be able to create that extra uplift for the viewer.”

In short, “If you throw enough resources, everything can be done with incredible quality,” says Hungenaert, “but it’s not viable if it breaks the bank.”

Web3 and Metaverse

One way of adding to the bank is to unlock new revenue from Web3 technologies and applications. The starting point is the market for digital merchandise, in which fans pay to own an NFT of a unique item.

FIFA+ Collect is among the latest digital collectibles platforms to launch, enticing sign-ups with ‘VIP experience giveaways’ for fans to attend the World Cup in Qatar.

Deloitte predicts the sports market will generate more than $2bn in NFT sales this year. Among the largest players is Sorare, which has deals with the top five soccer leagues, including the Bundesliga, to market video-based tokens. Eventually, Deloitte predicts, all sports are likely to have some form of NFT offering.

Moonsault is conceived as a model for Web3 video implementation by sports rights holders. The platform is a way of further monetising WWE’s video archives and engaging fans before and after airing of televised matches. Content is created by Blockchain Creative Labs (the NFT business and creative studio formed by FOX Entertainment and Bento Box Entertainment) and runs on Eluvio Content Blockchain.

While this is based on archive video, activations during a live event are “not out of the realm of possibility,” says Michelle Munson, co-founder & CEO of Eluvio.

In fact, Eluvio is partnering with a yet-to-be-named sports competition in Australia on a live-streamed pilot. This could cut the traditional third-party licensees of content (broadcasters and streaming service providers) out of the value chain.

“We can harness this tech to make a new way for publishers, creators and fans to be around media and to disintermediate [traditional] value extraction,” says Munson.

Warner Bros. Discovery Sports Europe is also engaging with the technology. It is working with digital producers Infinite Reality for activations of virtual content during the UCI Track Champions League in December.

“This will include direct interaction between fans and riders in private podcasts and sessions before, during and after the competition, hosted in the Metaverse.

“Fans will have the possibility to question riders as if they were accredited media. That’s the biggest difference to a standard web stream,” says François Ribeiro, head of Discovery Sports Events. “For us, we are learning how we can engage the audience and how we position it differently to other products.”