YouTube removes 7.8m videos for breaching site rules in Q3

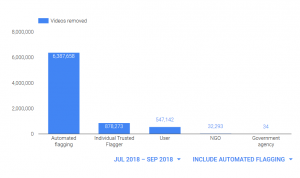

YouTube removed 7.8 million videos that violated the platform’s guidelines in the three months from July to September, according to company stats.

YouTube said that 81% of the removed videos were first detected by machines, not viewers, and that 74.5% of these machine-detected videos had never been viewed.

“The vast majority of attempted abuse comes from bad actors trying to upload spam or adult content,” said YouTube in a blog post.

“Over 90% of the channels and over 80% of the videos that we removed in September 2018 were removed for violating our policies on spam or adult content.”

The figures come from the video giant's latest quarterly YouTube Community Guidelines Enforcement Report, which it introduced in April 2018 as part of its commitment to transparency.

When YouTube detects a video that violates its guidelines, it removes the video and applies a 'strike' to the channel that uploaded it. Entire channels are removed if they are dedicated to prohibited content or "contain a single egregious violation, like child sexual exploitation."

YouTube said that videos containing violent extremism or child safety issues are the most egregious types of content it has to deal with but are also “low volume” areas.

“Our significant investment in fighting this type of content is having an impact,” said YouTube. “Well over 90% of the videos uploaded in September 2018 and removed for violent extremism or child safety had fewer than 10 views.”

YouTube said that 10.2% of its video removals were for child safety, while child sexual abuse material represents “a fraction of a percent of the content we remove”.

YouTube also cracked down on comments that violate its guidelines, removing more than 224 million of them in the third quarter.

It said that the majority of these were spam comments and the total number of removals represents a fraction of the billions of comments posted on YouTube each quarter.

YouTube uses a mixture of automated detection technology and human reviewers to flag, review and remove videos and abusive or spam comments. In 2017, it introduced new guidelines taking a tougher stance on hateful, demeaning and inappropriate content.